CHAPTER 2

LITERATURE REVIEW

2.1 Introduction

Biometric recognition of human faces is a popular research topic in computer vision, motivated largely by commercial security systems. Although other biometric identification methods such as fingerprints and iris scans may be more accurate, face recognition has always been a major research focus because it is noninvasive, natural and intuitive to users.

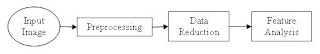

Because biometric recognition of human faces is a computer vision application, it follows the standard image analysis methodology shown in Figure 2.1. In the preprocessing phase, unwanted noise and irrelevant data are eliminated from the image. Other preprocessing steps include spatial quantization (reducing the number of bits per pixel) and finding regions of interest. The second stage transforms the image data into another domain to extract the significant features. Lastly, the extracted features are examined and evaluated.

Figure 2.1: Standard Image Analysis Model
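The bit-reduction step mentioned for the preprocessing phase can be illustrated with a minimal sketch. It assumes an 8-bit grayscale image stored as a list of rows; the function name `quantize` and the sample pixel values are hypothetical and not taken from any of the reviewed systems.

```python
def quantize(image, bits):
    """Reduce an 8-bit grayscale image to `bits` bits per pixel (assumed input format)."""
    if not 1 <= bits <= 8:
        raise ValueError("bits must be between 1 and 8")
    shift = 8 - bits
    # Dropping the low-order bits leaves 2**bits distinct gray levels.
    return [[(pixel >> shift) << shift for pixel in row] for row in image]


# Hypothetical 2x3 image, reduced to 2 bits per pixel (4 gray levels).
image = [[0, 37, 128], [200, 255, 64]]
quantized = quantize(image, 2)
print(quantized)
```

Each output pixel keeps only its two most significant bits, so nearby intensities collapse onto the same gray level.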

An understanding of the processes involved in existing face recognition systems gives clues to the construction of a biometric recognition system. This chapter therefore reviews the relevant literature on face recognition, Principal Component Analysis (PCA) as a data reduction method, and the neural network approach to recognizing an unknown face image.

2.2 Background of Biometric Recognition of Human Faces

The intuitive way to recognize a face is to extract its major features and compare them with the same features on other faces. Thus the majority of contributions to biometric recognition of human faces focus on detecting prominent features such as the eyes, nose, mouth and head outline. Recognition is considered successful when the relationships among these features match those of a known face.

[Brunelli, 1993] used template matching for biometric recognition of human faces. The algorithm prepares a set of four masks representing the eyes, nose, mouth and face for each registered person. To identify the unknown person in an image, the algorithm first detects the eyes using template matching and then normalizes the position, scale and rotation of the face in the image plane using the detected eye positions. Next, for each person in the database, the algorithm places that person's four masks at their positions relative to the eyes and computes the cross-correlation values between the four masks and the image. The unknown person is classified as the person giving the greatest sum of the cross-correlation values of the four masks. The same basic idea was used by [Doi, 1998] to propose a face identification system for automatic lock control. The difference between the two methods is that Doi proposed a new template matching method that is robust to lighting fluctuation.
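The classification rule described above, choosing the person with the greatest sum of cross-correlation values over four masks, can be sketched as follows. This is an illustration under stated assumptions, not the implementation of [Brunelli, 1993]: the image patches are assumed to be already cut out at the mask positions, the correlation is normalized (an assumption made here so that scores are comparable), and the names `ncc`, `identify`, `database` and `patches` and all pixel values are hypothetical.

```python
import math


def ncc(patch, mask):
    """Normalized cross-correlation of two equal-sized grayscale patches."""
    a = [p for row in patch for p in row]
    b = [m for row in mask for m in row]
    mean_a = sum(a) / len(a)
    mean_b = sum(b) / len(b)
    da = [x - mean_a for x in a]
    db = [x - mean_b for x in b]
    denom = math.sqrt(sum(x * x for x in da) * sum(x * x for x in db))
    if denom == 0:
        return 0.0  # a flat patch carries no correlation information
    return sum(x * y for x, y in zip(da, db)) / denom


def identify(patches, database):
    """Return the person whose masks give the greatest summed correlation."""
    scores = {
        person: sum(ncc(patches[region], masks[region]) for region in masks)
        for person, masks in database.items()
    }
    return max(scores, key=scores.get), scores


# Hypothetical 2x2 masks for two registered persons.
database = {
    "alice": {"eyes": [[10, 200], [10, 200]], "nose": [[50, 60], [70, 80]],
              "mouth": [[5, 5], [90, 90]], "face": [[0, 100], [100, 0]]},
    "bob": {"eyes": [[200, 10], [200, 10]], "nose": [[80, 70], [60, 50]],
            "mouth": [[90, 90], [5, 5]], "face": [[100, 0], [0, 100]]},
}
# Hypothetical patches taken from the normalized test image.
patches = {"eyes": [[12, 190], [15, 205]], "nose": [[52, 61], [69, 83]],
           "mouth": [[7, 4], [88, 93]], "face": [[3, 98], [101, 2]]}

best, scores = identify(patches, database)
print(best, scores)
```

Subtracting the mean and dividing by the norms makes the score insensitive to uniform brightness and contrast changes, which is one common way to reduce the effect of lighting on plain cross-correlation.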

[Sato, 1998] used a neural network instead of template matching to recognize a face. In the neural network, the output units correspond to registered persons and the input units correspond to pixels of the input image. Sato et al. trained the neural network using three face templates for each person. In the recognition phase, the neural network computes an output vector from each test image. The unknown person in the image is classified as the person corresponding to the output unit with the maximum value in the output vector, provided that this maximum is greater than a threshold value.
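The decision rule applied to the network output can be written in a few lines. The sketch below covers only that final step, not the network itself; the function name `classify`, the sample output vectors and the threshold of 0.5 are hypothetical values chosen for illustration.

```python
def classify(output_vector, names, threshold):
    """Return the name for the largest output unit, or None if it is not above threshold."""
    best = max(range(len(output_vector)), key=output_vector.__getitem__)
    if output_vector[best] > threshold:
        return names[best]
    return None  # no registered person is a confident match


names = ["person_a", "person_b", "person_c"]
accepted = classify([0.10, 0.85, 0.30], names, threshold=0.5)
rejected = classify([0.20, 0.40, 0.30], names, threshold=0.5)
print(accepted, rejected)
```

The threshold lets the system reject faces that do not belong to any registered person instead of forcing a match to the nearest one.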

[Kawaguchi, 2000] proposed a new algorithm to detect the irises of both eyes from a human face in an intensity image. They used a separability filter and the Hough transform to measure the fit of a pair of blobs to the face image. The algorithm then selects the pair of blobs with the smallest cost as the irises of both eyes.

A low-dimensional characterization of faces was first introduced by Kirby and Sirovich in 1987 and 1990. In 1991, Turk used the eigenspace method instead of template matching. This method constructs an eigenspace for each registered person using sample face images. In the recognition phase, the test image is projected onto the eigenspaces of all registered persons to compute the matching errors. The unknown person in the image is classified as the person corresponding to the eigenspace giving the smallest matching error. It was reported that the eigenspace method was relatively robust to variations in position, scale and pose of the face if the eigenspace of each person was constructed using face images with different positions, scales and poses.
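The per-person eigenspace matching described above can be sketched in pure Python. This is a simplified illustration and not the published method: each eigenspace keeps only one principal axis, found by power iteration; images are flattened to short lists of pixel values; and all names (`build_eigenspaces`, `matching_error`, `recognize`, `gallery`) and the sample data are hypothetical.

```python
import math


def mean_vector(samples):
    return [sum(col) / len(samples) for col in zip(*samples)]


def top_eigenvector(samples, mean, iterations=100):
    """Leading principal axis of the centered samples, by power iteration."""
    centered = [[x - m for x, m in zip(s, mean)] for s in samples]
    dim = len(mean)
    v = [1.0 / math.sqrt(dim)] * dim  # assumed start; must not be orthogonal to the axis
    for _ in range(iterations):
        # Apply the scatter matrix without forming it: X^T (X v).
        proj = [sum(c * vi for c, vi in zip(row, v)) for row in centered]
        w = [sum(p * row[j] for p, row in zip(proj, centered)) for j in range(dim)]
        norm = math.sqrt(sum(x * x for x in w))
        if norm == 0:
            break
        v = [x / norm for x in w]
    return v


def build_eigenspaces(gallery):
    spaces = {}
    for person, samples in gallery.items():
        mean = mean_vector(samples)
        spaces[person] = (mean, top_eigenvector(samples, mean))
    return spaces


def matching_error(image, mean, axis):
    """Distance between the image and its projection onto the eigenspace."""
    d = [x - m for x, m in zip(image, mean)]
    coeff = sum(a * b for a, b in zip(d, axis))
    residual = [a - coeff * b for a, b in zip(d, axis)]
    return math.sqrt(sum(r * r for r in residual))


def recognize(image, spaces):
    errors = {p: matching_error(image, m, a) for p, (m, a) in spaces.items()}
    return min(errors, key=errors.get), errors


# Hypothetical 4-pixel "face images", three samples per registered person.
gallery = {
    "A": [[10, 20, 30, 40], [12, 22, 32, 42], [14, 24, 34, 44]],
    "B": [[40, 30, 20, 10], [44, 32, 20, 8], [48, 34, 20, 6]],
}
spaces = build_eigenspaces(gallery)
person, errors = recognize([13, 23, 33, 43], spaces)
print(person, errors)
```

A practical system would keep several eigenvectors per eigenspace and would normally use a linear algebra library for the eigendecomposition; the single-axis version is used here only to keep the matching-error idea visible.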

Eigenfaces remain a topic of practical importance and interest for researchers seeking the best performance. [Yambor, 2000] analyzed PCA with automated eigenvector selection and studied combinations of traditional distance measures to improve performance in the matching stage of face recognition. [Moon, 2001] investigated PCA using the FERET database, examining eigenface performance under changes in illumination, compression algorithm, number of eigenvectors and the similarity measure used in the classification process. Automated eigenvector selection, or dimension reduction, is a popular technique, and [Chichizola, 2005] proposed a new algorithm known as Reduced Image Eigenfaces (RIE) to improve the recognition rate.

This line of research continues. [Yang, 2000] demonstrated successful results in face recognition, detection and tracking, with PCA representing the second-order statistics of the face image. The eigenfaces approach was also used by [Watta, 2000] to analyse facial video data of subjects driving a vehicle. [Aravind, 2002] combined eigenfaces with the preprocessing techniques of mean filtering, background elimination and local enhancement filtering, and reported a good recognition rate. [Lemieux, 2002] and [Ibrahim, 2004] also applied several image processing operations such as segmentation, deskewing, zooming, rotation and warping to observe the capability of eigenfaces.

The capability of neural networks in pattern classification has led to their use in face recognition experiments. [Ahmad Fadzil, 1994] developed a human face recognition system (HFRS) using a multilayer perceptron (MLP) artificial neural network, and [Debipersad, 1997] used a discrete cosine transform (DCT) and a neural network to recognize an unknown face. [Thomaz, 1998] combined eigenfaces with a Radial Basis Function (RBF) network as the classifier in a face recognition system. The same approach was implemented by [Nazish, 2001], but with a backpropagation neural network.
