Artificial neural networks (ANNs) hold considerable appeal for many AI researchers. A neural network can be defined as a model of reasoning based on the human brain. The brain consists of a densely interconnected set of nerve cells, or basic information-processing units, called neurons. The human brain contains nearly 10 billion neurons and 60 trillion connections (synapses) between them [Shepherd, 1990]. By using multiple neurons simultaneously, the brain can perform its functions much faster than the fastest computers in existence today [Negnevitsky, 2002].

2.4.1 Architecture

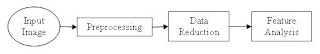

A multilayer perceptron is a feed-forward neural network with one or more hidden layers. Typically, the network consists of an input layer of source neurons, at least one hidden layer of neurons, and an output layer of neurons (Figure 2.3). The input signals are propagated in a forward direction on a layer-by-layer basis. Backpropagation is perhaps the most popular and widely used learning algorithm for such networks. It is based on the generalized delta rule proposed in 1985 by a research group headed by David Rumelhart at the University of California, San Diego, USA.

Figure 2.3: Feed-forward Neural Network
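
The layer-by-layer forward propagation described in this section can be illustrated with a minimal sketch. It is not the implementation used in this work: the sigmoid activation, the 3-4-2 layer sizes, the random weight range, and all function names (`sigmoid`, `layer_forward`, `forward`, `random_layer`) are assumptions chosen only for illustration. Training by backpropagation is not shown.

```python
import math
import random


def sigmoid(x):
    """Logistic activation; an assumed choice, the text does not name one."""
    return 1.0 / (1.0 + math.exp(-x))


def layer_forward(inputs, weights, biases):
    """Compute one layer's outputs: weighted sum of inputs plus bias, then sigmoid."""
    return [
        sigmoid(sum(w * x for w, x in zip(neuron_weights, inputs)) + b)
        for neuron_weights, b in zip(weights, biases)
    ]


def forward(inputs, layers):
    """Propagate the input signals forward, one layer at a time."""
    signal = inputs
    for weights, biases in layers:
        signal = layer_forward(signal, weights, biases)
    return signal


def random_layer(n_inputs, n_neurons, rng):
    """Create small random weights and biases for a fully connected layer."""
    weights = [
        [rng.uniform(-0.5, 0.5) for _ in range(n_inputs)]
        for _ in range(n_neurons)
    ]
    biases = [rng.uniform(-0.5, 0.5) for _ in range(n_neurons)]
    return weights, biases


rng = random.Random(0)
# Hypothetical 3-4-2 network: 3 source neurons, 4 hidden neurons, 2 output neurons.
network = [random_layer(3, 4, rng), random_layer(4, 2, rng)]
outputs = forward([0.2, 0.7, 0.1], network)
print(outputs)
```

Each entry in `network` holds the weights and biases of one layer, so adding a further hidden layer only means appending another `random_layer(...)` whose input size matches the previous layer's neuron count.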